Test Run Overview Page

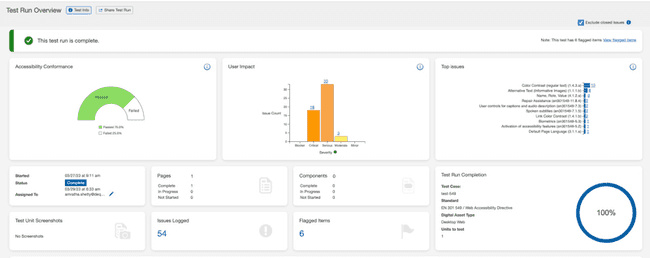

The Test Run Overview screen is a dashboard that gives you a quick snapshot of the current state of your test run. Depending on the status of the test run, the information provided includes summary statistics (available only for completed test runs) and a snapshot of test units and issues. The overall completion status of the test run is also shown.

The top section of the Test Run Overview screen displays summarized information for a test run (this includes combined Components and Pages statistics).

The most important features to understand are the following:

The first section includes buttons that you can use depending on the status of the Test Run (In Progress or Completed). It also includes the Exclude Closed Issues checkbox.

-

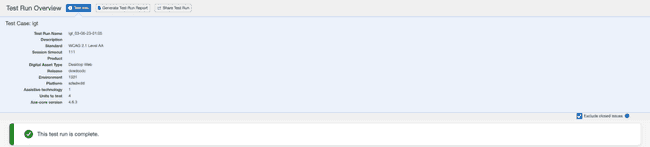

Test Info button: Expands a handy reference section of information below that displays additional details about this Test Run, such as its Description, Standard, Session, timeout, Product, Release, Environment, Platform, Assistive technology and Units to test.

- Generate Test Run Report button: Use this button to view and print an HTML report for completed test runs. Clicking it opens a confirmation dialog box where you choose whether to generate the report with or without closed issues.

Note: The Generate Test Run Report button does not appear on the Test Run Overview page when the testing standard is not WCAG.

- Share Test Run button: This button is available only if your administrator has enabled it for you on the Admin Settings page. Clicking it opens a dialog box with a URL that you can copy and share with both Auditor and non-Auditor users. Non-Auditor users can only view the data at the shared URL.

Note: Users cannot view test run details using the URL if the test run has been archived.

- Exclude Closed Issues: Select this checkbox to exclude closed issues from accessibility conformance, issues by severity, checkpoints, and the total number of issues in the Test Run Overview page.

The next section includes three panels with the following information:

- Accessibility Conformance: The Accessibility Conformance graph reflects your product's conformance with accessibility standards and guidelines. It displays the number of assessments that passed or failed based on your selection: Success Criteria or Deque Checkpoints. The default view is Success Criteria.

Note: The number of Deque Checkpoints depends on the standard and conformance level selected for the assessment. Also note that if the assessment includes 50 WCAG issues but all of them are associated with 1 Deque Checkpoint, the Overall Accessibility Conformance % appears to indicate a high level of conformance. However, if those 50 issues are Serious or Critical and affect multiple pages, the website or application may be unusable for users with disabilities. When determining the overall Accessibility Health and Level of Effort for Compliance, refer to all data points. It only takes one "High Risk" User Impact issue to increase your vulnerability to legal action.

- User Impact: By default, each identified issue is associated with a User Impact category: Blocker, Critical, Serious, Moderate, or Minor. Blocker, Critical, and Serious issues are considered "High Risk", with a greater effect on usability and greater vulnerability to legal action. When determining an assessment's overall Accessibility Health, this data should carry significant weight.

  - Blocker: This issue results in catastrophic roadblocks for people with disabilities. These issues will definitely prevent them from accessing fundamental features or content, with no possible workarounds. This type of issue puts your organization at high risk. Prioritize fixing immediately, and deploy as hotfixes as soon as possible. Should be extremely rare.

  - Critical: This issue results in blocked content for people with disabilities, and will definitely prevent them from accessing fundamental features or content. This type of issue puts your organization at risk. Prioritize fixing as soon as possible, within the week if possible. Remediation should be a top priority. Should be infrequent.

  - Serious: This issue results in serious barriers for people with disabilities, and will partially prevent them from accessing fundamental features or content. People relying on assistive technologies will experience significant frustration as a result. Issues in this category are major problems, and remediation should be a priority. Should be very common.

  - Moderate: This issue results in some barriers for people with disabilities, but will not prevent them from accessing fundamental features or content. Prioritize fixing in this release if there are no higher-priority issues. Will get in the way of compliance if not fixed. Should be fairly common.

  - Minor: Considered to be a nuisance or an annoyance bug. Prioritize fixing only if the fix takes a few minutes and the developer is already working on the same screen or feature; otherwise the issue should not be prioritized. Will still get in the way of compliance if not fixed. Should be very infrequent.

- Top Issues: The Top Issues data identifies the top accessibility issues detected (those with the largest remediation impact), based on either Deque Checkpoints or Success Criteria. This data is typically used when prioritizing training and addressing accessibility knowledge gaps.
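The caution in the Accessibility Conformance note can be made concrete with a little arithmetic. The sketch below is an illustration only, not the product's actual calculation: it assumes a checkpoint counts as failed when at least one counted issue is logged against it, and the `Issue` class, the `summarize` function, the checkpoint name, and the figure of 50 total checkpoints are all hypothetical.

```python
from dataclasses import dataclass

# Impact categories treated as "High Risk" in the User Impact description.
HIGH_RISK = {"Blocker", "Critical", "Serious"}


@dataclass
class Issue:
    checkpoint: str       # hypothetical checkpoint identifier
    impact: str           # Blocker, Critical, Serious, Moderate, or Minor
    closed: bool = False  # closed issues can be excluded from the totals


def summarize(issues, total_checkpoints, exclude_closed=False):
    """Assumed model: a checkpoint fails if any counted issue is logged against it."""
    counted = [i for i in issues if not (exclude_closed and i.closed)]
    failed_checkpoints = {i.checkpoint for i in counted}
    passed = total_checkpoints - len(failed_checkpoints)
    return {
        "conformance_pct": round(100.0 * passed / total_checkpoints, 1),
        "total_issues": len(counted),
        "high_risk_issues": sum(1 for i in counted if i.impact in HIGH_RISK),
    }


# 50 Critical issues that all map to a single (hypothetical) checkpoint.
issues = [Issue("checkpoint-A", "Critical") for _ in range(50)]
summary = summarize(issues, total_checkpoints=50)
print(summary)
```

Under these assumptions the conformance percentage looks high because only one checkpoint fails, while the high-risk issue count shows that all 50 issues need urgent attention. This is why the two panels should be read together.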

The bottom part of the section includes the following information:

- Started field: The date and time testing started for this test run.

- Status field: When the entire test run is complete, a date/time stamp is displayed. Until that occurs, the 'In Progress' status is shown.

- Assigned To field with Change assignment link: Displays the name of the user the test run is currently assigned to. The link under it opens a selection menu where you can assign the test run to a different user to complete.

- Total Components and Status counts: The first line shows the number of components that have been added to the test run, and the three lines under it show how many of them are in each state.

- Total Pages and Status counts: The first line shows the number of pages that have been added to the test run, and the three lines under it show how many of them are in each state.

- Components and Pages panel tabs: The currently-displayed panel's tab is in the forefront, while the other has its tab label underlined to indicate it functions as a link to bring its panel from the background to the forefront and display its content below.

- Completion Progress bar: Hovering the mouse over the blue progress bar displays a popup percentage to indicate completion of all of the testing for the entire test run, based on both automated and manual testing for all components or pages that have been added to be tested.

- Test Unit Screenshots: These screenshots give you a view of what was tested without having to open and review every test unit. This card shows thumbnail images of 5 test unit screenshots and a number indicating how many additional screenshots exist. Selecting one of the thumbnails, or the number to the right of them, opens a carousel with all of the test unit screenshot images.

- Issues Logged and Items Flagged counts and links: A numeric value is displayed for each to represent the totals for all checkpoints for all components and pages in the test run, with links that display the Issues for... and Flags for... screens to view all details.

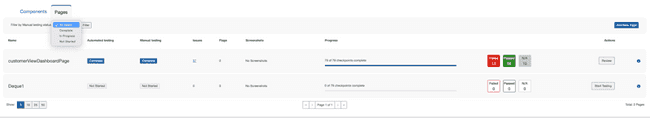

The Components and Pages tabs display "panels", which themselves display "sections" of summarized information about each component or page that has been added to the Test Run. Additionally, they include buttons and links that either allow you to view additional information or take further action.

Note: The screenshot below uses a Components panel with a component section for demonstration purposes, but the same information also applies to the Pages panel.

Each Components or Pages panel section consists of the following 8 main features, which correspond to the numbered items in the screenshot above:

- Filtering By Status: Refine the sections of components that have been added to this test run by their manual testing Status. For more information, see Filtering by Status.

- Add New button: Add a new component to this test run (and optionally, also to its associated test case). For more information, see Adding a New Component or Page.

- Automated & Manual Status: The status for each type of testing is displayed in a separate column.

- Issues & Flags links: The total number of issues logged and items flagged are displayed, and each is a link that takes you to the respective Issues for... and Flags for... screens to view all details. For more information about each, see Viewing a List of Logged Issues and Viewing a List of Flagged Items.

- Screenshots: The total number of screenshots that the page or component contains.

- Progress: View the progress bar, and hover the mouse over it to display a popup of the percentage value of the total number of Checkpoint Tests that have been marked with a result for this component.

- Pass/Fail/NA counts: The total number of checkpoints marked with each result is displayed for this component. For related information, see Assigning Checkpoint Results.

- Action button: Depending on the status, the appropriate command appears on the label (Not Started > Start Testing, In Progress > Resume, Completed > Review). For complete instructions for each, see Start Testing a Component or Page, Resume Testing an In-Progress Component or Page and Review Completed Testing for a Component or Page.

Information button: View additional component details in a popup window such as the URL, Elements, Selector, and Instructions; and optionally, Send URL to the connected test browser tab.
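The Progress percentage and the Action button label described above can be sketched in a few lines. This is a minimal illustration, not the product's implementation: it assumes that a checkpoint test counts as "marked with a result" once it is marked Pass, Fail, or NA, and the function and variable names are hypothetical.

```python
# Status-to-label mapping taken from the Action button description.
ACTION_LABELS = {
    "Not Started": "Start Testing",
    "In Progress": "Resume",
    "Completed": "Review",
}


def progress_percent(passed, failed, not_applicable, total_checkpoints):
    """Percentage of checkpoint tests that have been marked with a result."""
    if total_checkpoints == 0:
        return 0  # nothing to test yet, so report no progress
    marked = passed + failed + not_applicable
    return round(100 * marked / total_checkpoints)


def action_label(status):
    """Return the button label shown for a component or page status."""
    return ACTION_LABELS[status]


# Example: 20 Pass, 6 Fail, and 4 NA out of 40 checkpoint tests.
percent = progress_percent(20, 6, 4, 40)
label = action_label("In Progress")
print(percent, label)
```

In this example 30 of the 40 checkpoint tests have a result, so the progress value is 75 percent and the button for an in-progress component reads Resume.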