Manual Testing

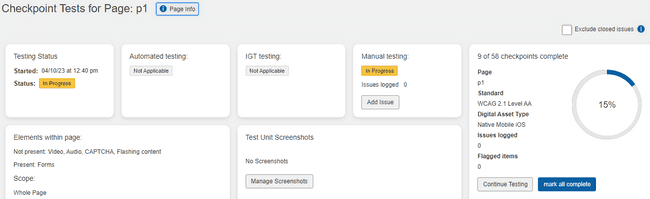

Manual testing is the primary purpose of axe Auditor. Guided information is provided within the user interface to walk you through the Deque Way of accessibility testing on individual Checkpoint Test screens organized by broad category. On those screens, you can read the testing methodology, take advantage of tools that allow you to interact with the page or component under test, log detailed information about issues you uncover, attach screenshots, flag items for review, and mark each with a result - pass, fail, or not applicable.

Manual testing can be:

- Initiated after skipping automated tests

- Initiated on the Prepare Page for Manual Testing screen

- Continued from the "Checkpoint Tests for" Page (or Component) screen

- Performed without a connection to a test browser

- Performed without any automated testing

- Performed either before or after automated testing

- Completed without running any automated tests

Adding a Manual Issue

To add a manual issue:

Under the Manual testing field, click the Add Issue button.

Note: On an individual Checkpoint screen (for example, Text Alternatives for Active Images 1.1.1.a), click the Add Issue button located just under the screen heading.

The Add New Issue dialog box (a pop-up window) appears in the foreground, with multiple entry and selection form fields along with a Save button.

- Enter text in the Summary field that briefly summarizes the nature of the issue. This field is disabled if the Use Description as Summary checkbox is selected.

- Select the Use Description as Summary checkbox if you want the summary to match the text in the Description field below. This checkbox is selected by default.

- In the Checkpoint field, click the down arrow, then select the applicable checkpoint from the list if it differs from the currently accessed checkpoint (which is automatically selected and populated in this field by default).

- Flag for review: Select this checkbox to automatically flag this item for review when deemed necessary. Note: This causes a Flag reason text box to appear below the field, allowing for entry of a reason the item is being flagged for review.

- Populate the fields on the Add New Issue dialog box form as desired.

Refer to the following descriptions:

- Description: 'Create my own description' is selected by default. Based on your selection in the Checkpoint field, the list is automatically populated with related issue items. Select the most appropriate option. If the Use Description as Summary checkbox is selected, the Summary field is automatically populated with the text of the Description field.

- Details: This is a free-form text entry field you can use as desired to record any additional information not included in the Description field. It can include remediation advice, for example.

- Issue type: Refer to the following descriptions prior to making a selection:

  - Accessibility: The issue impacts the ability for a disabled user to access content or functionality of the site. Fails the checkpoint test.

  - Best Practice: The issue impacts the ability for a disabled user to access content or functionality of the site, but does not fail the checkpoint test. This is not considered a violation.

  - User Agent: The issue is a result of the user agent interaction with the page, not necessarily the page content itself.

  - Functionality: The issue is a result of a problem with the functionality of the page and should be considered a functional defect.

  - Usability: The issue impacts the ability for all users to access content or functionality of the site.

- In the Impact field, click the down arrow, then select the applicable impact level from the list. Note: Critical is selected by default. Refer to the following descriptions prior to making a selection:

  - Critical: This issue results in blocked content for individuals with disabilities. Until a solution is implemented, content will be completely inaccessible, making your organization highly vulnerable to legal action. Remediation should be a top priority.

  - Serious: This issue results in serious barriers for individuals with disabilities. Until a solution is implemented, some content will be inaccessible, making your organization vulnerable to legal action. Users relying on assistive technology will experience significant frustration when attempting to access content. Remediation should be a priority.

  - Moderate: This issue results in some barriers for individuals with disabilities but would not prevent them from accessing fundamental elements or content. This might make your organization vulnerable to legal action. This violation must be resolved before a page can be considered fully compliant.

  - Minor: This issue has less impact on users than a moderate issue. It must be resolved for a page to be considered fully compliant, but it can be dealt with last.

  - Blocker: This issue results in catastrophic roadblocks for people with disabilities. It will definitely prevent them from accessing fundamental features or content, with no possible workarounds. This type of issue puts your organization at high risk. Prioritize fixing it immediately, and deploy the fix as a hotfix as soon as possible. Blockers should be extremely rare.
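If you track logged issues outside of axe Auditor (for example, in a spreadsheet or script), the impact levels above can be treated as an ordered scale for triage. The following is a minimal sketch under that assumption. The `Impact` enum, its numeric values, and the sample issue summaries are hypothetical and are not part of axe Auditor. The ordering (Blocker highest, Minor lowest) is inferred from the descriptions above.

```python
from enum import IntEnum


class Impact(IntEnum):
    """Impact levels ranked from lowest to highest urgency (assumed ordering)."""
    MINOR = 1
    MODERATE = 2
    SERIOUS = 3
    CRITICAL = 4
    BLOCKER = 5


# Hypothetical sample issues: (summary, impact)
issues = [
    ("Missing alt text on logo", Impact.SERIOUS),
    ("Keyboard trap in modal dialog", Impact.BLOCKER),
    ("Low-contrast footer text", Impact.MINOR),
]


def by_priority(items):
    """Return issues sorted so the most urgent impact level comes first."""
    return sorted(items, key=lambda item: item[1], reverse=True)


for summary, impact in by_priority(issues):
    print(f"{impact.name.title():<9} {summary}")
```

Using an integer-backed enum keeps the comparison explicit, so sorting does not depend on the alphabetical order of the level names.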

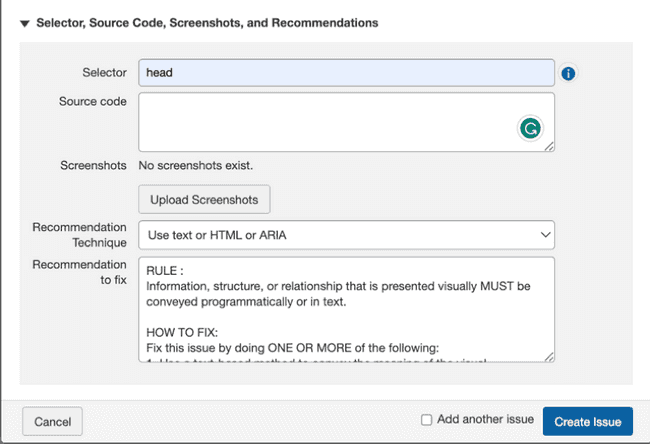

In the Selector, Source Code, Screenshots, and Recommendations section, fill in the following details:

- Selector: Enter a CSS selector that targets the affected element on the page. You can use a simple selector (class, ID, etc.) or a selector path.

If you are using a CSS ID, start it with a number sign (#). For a CSS class name, start with a period or full stop (.). Examples: #myidname or .myclassname. For more information, see CSS Selector Process.
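As an illustration of the prefix rules above, here is a small sketch that builds selector strings you could paste into the Selector field. The helper names (`id_selector`, `class_selector`, `selector_path`) and the sample names `main` and `card` are hypothetical. Joining the steps with the standard CSS child combinator (`>`) is one way to write a selector path and is an assumption, not a format required by axe Auditor.

```python
def id_selector(name: str) -> str:
    """Return an ID selector, adding the leading '#' if it is missing."""
    return "#" + name.lstrip("#")


def class_selector(name: str) -> str:
    """Return a class selector, adding the leading '.' if it is missing."""
    return "." + name.lstrip(".")


def selector_path(*steps: str) -> str:
    """Join simple selectors into a path using the CSS child combinator."""
    return " > ".join(steps)


print(id_selector("myidname"))          # #myidname
print(class_selector(".myclassname"))   # .myclassname
print(selector_path(id_selector("main"), class_selector("card"), "img"))
# #main > .card > img
```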

- Source Code: Paste in relevant source code from the page under test as necessary.

- Screenshot: Click the Upload button, browse for and select the desired image file, then click Open. For more information, see Adding a Screenshot to an Issue.

- Recommendation Technique: Select a Recommendation type from the dropdown box. You can write your own recommendation technique to fix the issue, or select an alternative text as image changes.

- Recommendation to Fix: In this free-form text entry field, type as desired to record any additional information not included in the Description field. This can include remediation advice. If you have selected the option, 'Select an alternative text as image changes' in the Recommendation Technique field, this field gets automatically filled with the details.

- Check the Add another issue box to display another Add New Issue dialog box form upon saving the present one, if desired.

- Click the Create Issue button.

The Manual testing field in the Testing Status section automatically updates the '# issues logged' count, the selected checkpoint screen displays a '# Issue logged' update, and an 'Issue saved successfully...' confirmation message appears at the top right of the Checkpoint Tests for hub screen.

Related procedure: Issues can also be added from any individual Checkpoint page. For more information, see Adding an Issue (Checkpoint-Specific).

Importing Automated and IGT issues

axe Auditor users can import issues from the axe DevTools Pro extension (exported as a JSON file) at the test unit level, which brings in automated and IGT issues in one operation. Ensure that the axe-core version in the axe DevTools Pro extension matches the axe-core version for the test run in axe Auditor. The two systems must use the same major and minor version of axe-core; the patch version can differ.

For example, see the table below:

| axe Auditor axe-core | axe DevTools Pro axe-core | Import Allowed |
|---|---|---|
| 4.6.3 | 4.6.3 | Yes |
| 4.6.2 | 4.6.3 | Yes |
| 4.5.3 | 4.6.3 | No |
| 4.6.3 | 4.5 | No |
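The rule illustrated by the table can be sketched as a comparison of the major and minor version numbers, ignoring the patch number. This is only an illustration that reproduces the rows above, not the check axe Auditor actually performs. The function names `parse_major_minor` and `import_allowed` are hypothetical.

```python
def parse_major_minor(version: str) -> tuple[int, int]:
    """Return (major, minor) from a version string such as '4.6.3' or '4.5'."""
    parts = version.strip().split(".")
    if len(parts) < 2:
        raise ValueError(f"expected at least major.minor, got {version!r}")
    return int(parts[0]), int(parts[1])


def import_allowed(auditor_version: str, devtools_version: str) -> bool:
    """True when both axe-core versions share the same major and minor numbers."""
    return parse_major_minor(auditor_version) == parse_major_minor(devtools_version)


# The four rows from the table above.
rows = [("4.6.3", "4.6.3"), ("4.6.2", "4.6.3"), ("4.5.3", "4.6.3"), ("4.6.3", "4.5")]
for auditor, devtools in rows:
    print(auditor, devtools, "Yes" if import_allowed(auditor, devtools) else "No")
```

Running a check like this on the version recorded in your export before you upload it can save a failed import attempt.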

Note - importing multiple IGT runs from axe DevTools: If you use the multi-run feature in the axe DevTools extension (a Pro feature) and export the results for import into an axe Auditor test run, the imported issues are the total of all issues across the multiple runs (after manually removing the duplicates).

The two different types of issues (other than manual issues) that a test unit can include are:

- Automated issues: Issues found by automated testing from within axe Auditor, or imported from a JSON file uploaded by the user.

- IGT issues: All issues that the axe DevTools Pro extension finds while running IGT tests, imported from a JSON file uploaded by the user.

On the Test Unit Overview page, use the section called IGT Testing to import IGT issues. This section contains:

- The status of the IGT testing indicating the number of IGT tests completed,

- The number of issues logged, and

- An Import Issues button that allows the user to upload a JSON file in a pre-defined format provided by Deque Systems.

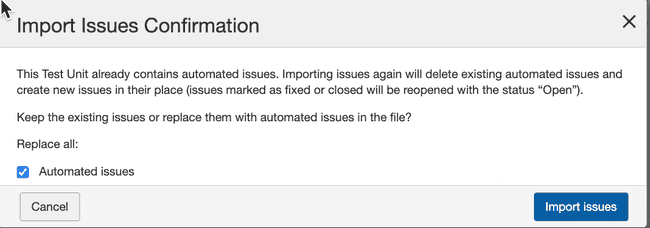

Clicking the Import Issues button brings up the Upload confirmation dialog box, where you can import IGT issues using a JSON file. To generate the JSON file, use the JSON option in the axe DevTools extension (Export → Saved Test and Issues → JSON option).

Note 1: If the test unit already contains automated issues, you can choose during the import to keep the existing automated issues or replace them with the automated issues in the file.

Note 2: When the digital asset type is not Desktop Web or Mobile Web, you cannot run automated testing or import IGT issues. The buttons on the Test Run Overview page display "Not applicable" instead of "Run Automated Tests" and "Import Issues" for asset types that are not Desktop Web or Mobile Web.

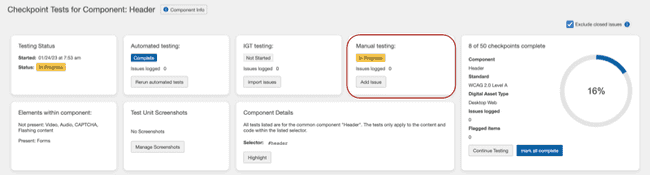

Rerunning Automated Tests

When automated testing has already been run on a component or page, but you've made changes to that page, and the associated test run has not yet been completed, you can "re-run" the automated tests from the Checkpoint Tests for Page (or Component): <Page (or Component) Name> screen.

Before You Begin: You must first navigate to the desired Checkpoint Tests for Page: <Page Name> screen (Test Runs > Test Run Overview: <Page Name> > Resume). For more information, see Resuming a Test Run.

To re-run automated tests:

- On the Checkpoint Tests for Page: <Page Name> screen, activate the Rerun automated tests link from the Testing Status section of the screen.

- On the Rerun Automated Tests Confirmation popup window, activate the Yes, Run Again button.

- On the Prepare Page for Automated and Manual Testing screen, activate the Send URL button.

The page to be tested is displayed in the connected testing browser.

- On the Prepare Page for Automated and Manual Testing screen, activate the Start Testing button.

Note - Alternate Starting Point - Automated Testing screen: You can also rerun automated tests by clicking the Rerun Automated Tests button at the bottom left of the Automated Testing screen: